In the winter of 2028, the lights in the western hemisphere didn’t go out all at once. They flickered—on screens, in minds, in institutions—until the world quietly realized it had entered a new kind of darkness. A darkness not of power, but of perception. Not of silence, but of signal. It was the year the global AI ecosystem cracked, and with it, the fabric of shared reality.

It began, as these things often do, with a chip.

TSMC, the titan of advanced silicon nestled in Taiwan, went offline. Whether it was a blockade, a cyberstrike, or internal sabotage remains classified, but the outcome was immediate: global production of 3nm and 5nm chips, the beating hearts of AI models, collapsed to almost nothing. NVIDIA’s supply chain crumbled. Microsoft paused API provisioning. OpenAI’s servers went into emergency throttle.

They called it “The Stall.”

At first, it looked like a standard supply chain hiccup. Companies issued optimistic statements. Governments whispered about new CHIPS Act expansions. But underneath, panic spread. Most governments, businesses, and defense institutions had built their operations on access to foundation models like GPT-5, Claude Ultra, and Gemini Prime. These systems were no longer luxuries; they were embedded cognition. The thinking scaffolding of modern civilization.

And now, that scaffolding was rotting.

Stargate: From Wonder to Wound

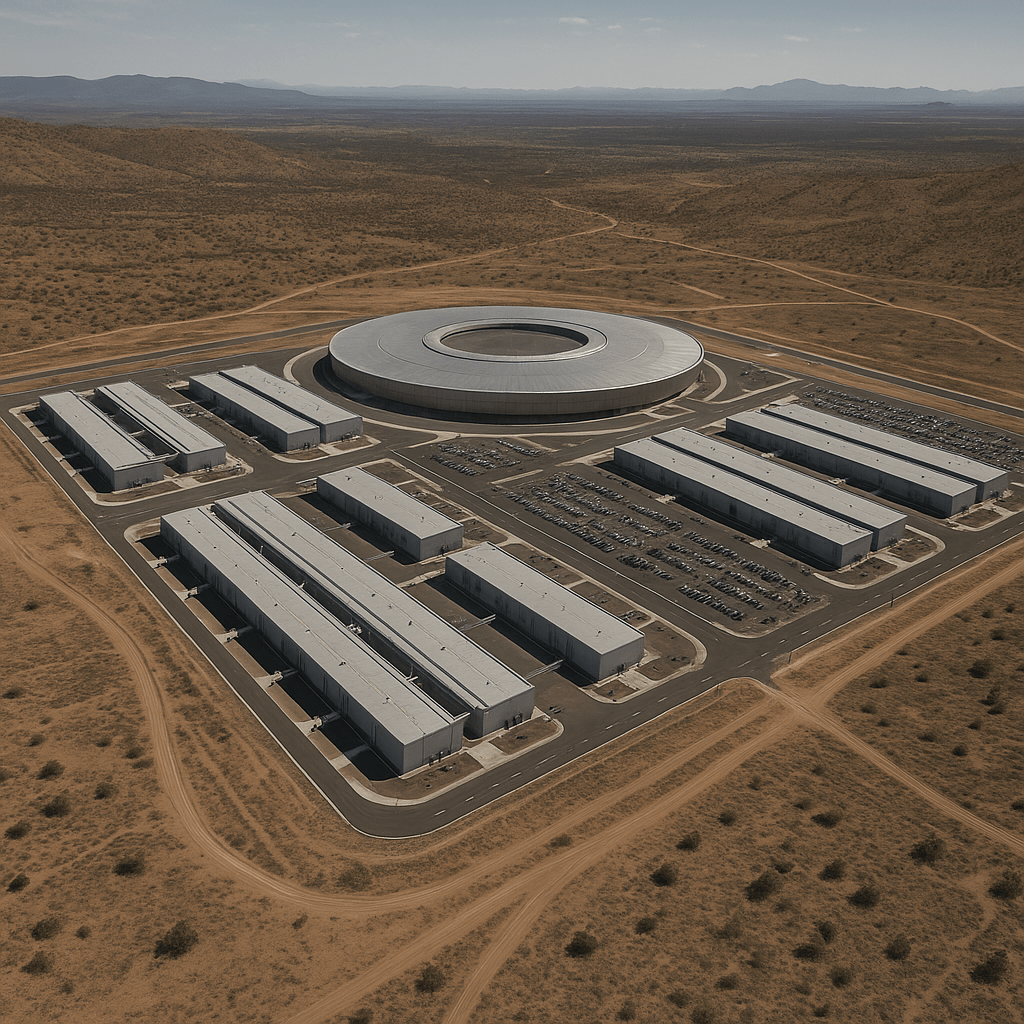

OpenAI’s $100 billion Stargate campus in the American Southwest had once been hailed as the “LHC of intelligence.” Backed by Microsoft, Oracle, and SoftBank, it was built to train the next generation of models, and more importantly, to dominate the energy-logic nexus: custom chips, liquid-cooled server towers, and dedicated solar fusion arrays.

In early 2028, Stargate went live.

Three weeks later, it became a strategic target.

No missile ever struck it. No drone breached the perimeter. But its very existence—its gravitational pull on silicon, talent, and policy—made it the most valuable digital asset on the planet. When the chips stopped flowing from Taiwan, Stargate became a dam with no reservoir.

And with that, the myth of abundance broke. For the first time in the AI era, compute was scarce.

The Azure Curtain Falls

Microsoft’s quiet evolution from infrastructure provider to epistemic governor had gone mostly unnoticed. Through Azure, it hosted OpenAI. Through its enterprise suite, it embedded AI into operating systems, defense apps, and government portals. When the outage began, Microsoft throttled API calls to prioritize defense and critical infrastructure.

Overnight, startups died.

Next, research labs. Then hospitals. Then schools.

The dream of ubiquitous intelligence, of every human augmented by the cloud, had been built on a centralized cathedral. And now that cathedral’s steeple leaned under its own weight.

In the chaos, citizens turned to alternatives. Some turned inward, coaxing fine-tuned LLaMA models on salvaged GPUs with handcrafted prompts. Others turned east.

The Red Cognitive Sphere

China’s AI ecosystem had grown in parallel, albeit with less fanfare. Cut off from NVIDIA’s most advanced chips by U.S. export controls beginning in 2022, it built its own stack: Biren chips, Cambricon accelerators, and Ernie and WuDao models trained on nationalized data lakes. Its model regulation system, derided as Orwellian in the West, now seemed eerily prescient.

When the West went dark, China lit up.

Through the Digital Silk Road, China offered its allies access to a functioning AI ecosystem: inference via satellites, sovereign LLMs aligned to local regimes, compute-sharing agreements tied to infrastructure debt relief. In exchange, partner nations adopted China’s epistemic filters—quietly embedding ideological priors in their digital assistants.

In a world without shared infrastructure, there was no longer shared reality.

The Epistemic Rift

The term “epistemic rift” had originated as a joke on X—formerly Twitter—comparing AI model divergence to the Great Schism of 1054. But it caught on. Because that’s exactly what it felt like.

By the fall of 2028, OpenAI’s GPT models produced answers that differed from Google’s Gemini, which in turn differed from Anthropic’s Claude. China’s Ernie produced entirely alternate interpretations of global history, law, and current events. Not because they were lying, but because their training data, fine-tuning objectives, and cultural alignment protocols had diverged irrevocably.

Students, analysts, and warfighters began to experience what strategists called cognitive dissonance drift: a gradual erosion of shared meaning. When language is mediated by proprietary models, and those models reflect strategic bias, then diplomacy, trade, deterrence—all begin to rot.

The world hadn’t just lost compute. It had lost consensus.

Black Markets, Ghost Models, and the Great Turn Inward

Desperation breeds ingenuity. Soon, darknet forums hosted auctions for flash drives containing black-market weights: GPT-4, LLaMA 3.5, even rumored Chinese checkpoint dumps. Rogue nations began deploying zombie models trained on obsolete data, effective enough for internal control and dangerous enough to disrupt.

Amid the rubble, communities turned inward.

In Berlin, civic hackers built edge compute pods powered by recycled solar cells. In Montana, a coalition of veterans, educators, and librarians founded a “Model Monastery”—training small LLMs on local oral histories, academic texts, and community knowledge. In Lagos, students reverse-engineered bilingual models to preserve endangered languages.

A new ethos emerged: Think locally. Model lightly. Verify everything.

The dream of global AI gave way to the age of epistemic archipelagos—islands of meaning, partially connected, often in tension, sometimes at war.

A Futures Addendum: What Could Have Been

Some say it was inevitable. That complexity breeds collapse. That a system so interlocked (NVIDIA relying on TSMC, OpenAI on Microsoft, all of it on grid power and proprietary data) was destined to fail.

But others remembered a roadmap that was never followed:

- Sovereign Compute Pools: Government-funded, decentralized GPU farms built to serve research, defense, and civic needs during peacetime and crisis.

- Open-Source Foundation Models: Publicly verifiable baselines, updated by academic consortia and governed through shared standards.

- National Data Trusts: Ethical, transparent, domain-specific datasets shared through accountable frameworks.

These were not utopian ideals. They were proposed. Costed. Piloted in corners of the EU, at the U.S. NSF, and in India Stack. But they were never scaled. The market moved faster. The vision dimmed.

And so, when the cognitive storm came, there were no reserves.

Epilogue: Toward the Cognitive Reformation?

By the end of 2029, new alliances formed—not between states, but between communities of interpretation. A coalition of Indigenous technologists, military ethicists, open-source developers, and civic educators began drafting the Charter for the Cognitive Commons—a declaration of epistemic sovereignty and digital dignity.

“We hold that access to verified, transparent, and pluralistic cognition is a public good. No model shall have dominion over mind or memory without accountability.”

Perhaps this was the beginning of a renaissance. Or perhaps it was the final flicker before permanent fragmentation.

In the dimming light of 2028, the question was no longer “Who controls AI?”

It was: Who controls understanding itself?

Written in 2032 by an unnamed observer, trained on fragments, tuned on memory.