The age of intelligent machines demands more than technical adaptation; it requires a fundamental shift in how we think. For centuries, education, leadership, and professional preparation have been built on the premise that mastering knowledge equips one for action. But when machines can generate, synthesize, and automate knowledge faster than any human, knowledge itself ceases to be the decisive edge. What matters instead is judgment: the ability to question assumptions, navigate complexity, and choose wisely under conditions of deep uncertainty.

Two converging signals make this moment urgent. The first is technological: agentic artificial intelligence (AI) is on the cusp of automating wide swaths of cognitive labor, the very work once thought uniquely human. The second is strategic: leaders across multiple domains—military, political, economic—are calling for sharper focus on the near-future fight, where technological acceleration and geopolitical rivalry intersect. Together, these signals reveal that the challenge is not simply learning new tools or doctrines. It is cultivating a new way of thinking—one that equips individuals and organizations to anticipate disruption, imagine alternative futures, and exercise moral agency where machines cannot.

Futures literacy offers such a way of thinking. It is more than forecasting or planning; it is a discipline of rehearsing the future—using multiple imagined scenarios to expose hidden assumptions, expand the range of possibilities, and reframe the present. Coupled with the deliberate cultivation of metacognition, moral agency, and strategic foresight, it becomes a philosophy for thriving in the human-machine era. The goal is not to fill minds with more information, but to shape the capacity to think differently, act wisely, and decide courageously in worlds that do not yet exist.

1. The Twin Signals of Disruption

1.1 The Amodei Signal: Automation of the Cognitive Ladder

For decades, automation was imagined as a threat to blue-collar labor. Robots displaced factory workers, algorithms managed logistics, and assembly lines became increasingly mechanized. White-collar knowledge work—law, finance, consulting, analysis—seemed secure, grounded in expertise, judgment, and the subtlety of human reasoning. That assumption no longer holds.

Dario Amodei, CEO of Anthropic and one of the leading architects of frontier AI, has warned that AI could eliminate up to half of all entry-level white-collar jobs and push unemployment to 10–20 percent within one to five years. This is not speculation about 2050. It is an alarm for the near future: the years between now and 2030.

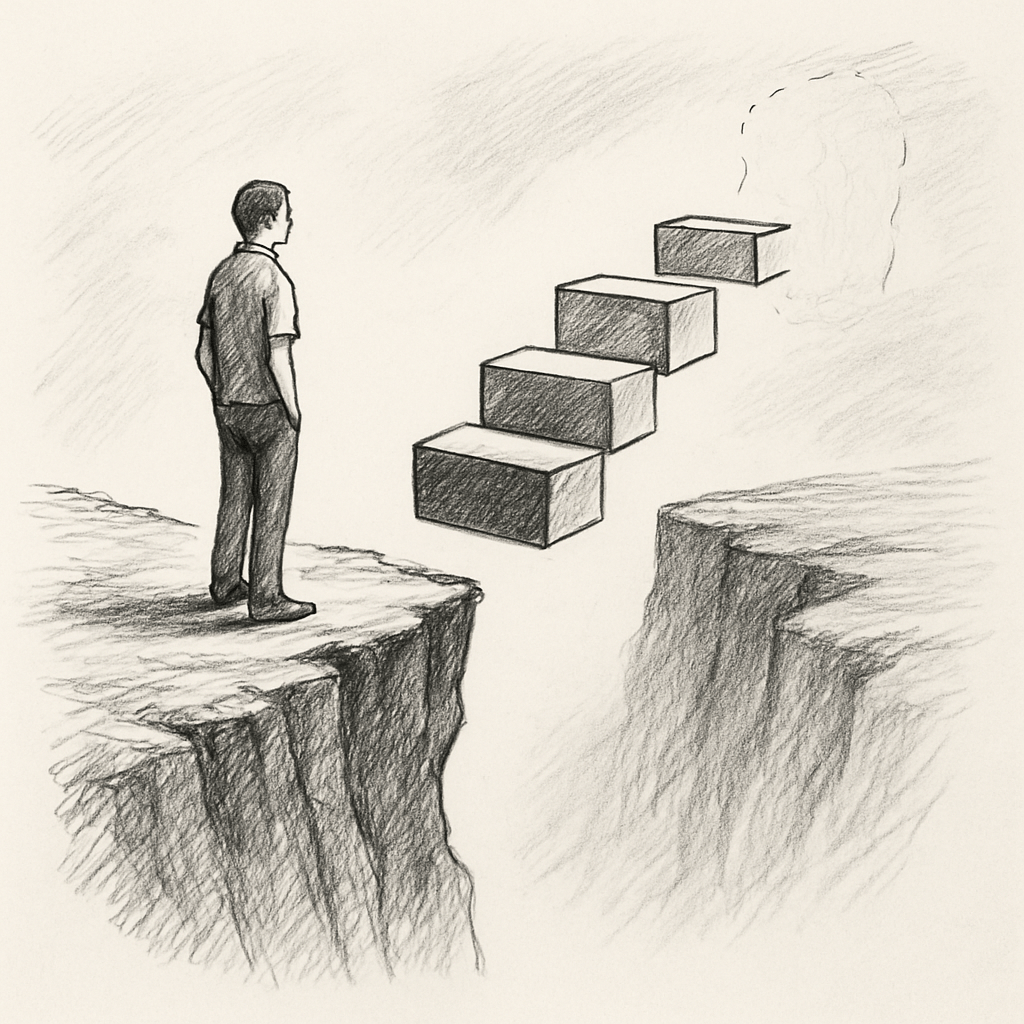

The key shift is from augmentation to automation. For the past several years, AI has been framed as a helpful assistant: drafting memos, summarizing documents, or accelerating research. But Amodei and others emphasize that an inflection point is near, at which entire workflows (grant proposals, market analyses, operational plans) are executed end-to-end by machines, “instantly, indefinitely, and exponentially cheaper.” The bottom rungs of the cognitive career ladder, the training ground where junior professionals once learned their craft, are crumbling.

The implications for human development are profound. If early roles disappear, how will individuals acquire the tacit knowledge and judgment once learned by doing? And if machines can execute cognitive labor at scale, what, exactly, remains uniquely human?

1.2 The Strategic Mandate: Preparing for the Near-Future Fight

Alongside technological disruption comes a second, equally pressing signal: the demand that leaders reorient their thinking toward the near-future fight. Across defense and national security circles, the call is clear: stop preparing for yesterday’s conflicts, and focus on the rapidly approaching battlespace of tomorrow—defined by great power competition, technological rivalry, and contested domains.

This mandate reflects a recognition that the character of conflict is shifting faster than institutions can adapt. Unmanned systems, cyber operations, cognitive warfare, and space denial all point to futures where legacy assumptions about power and victory no longer apply. The fight ahead will not wait for institutions to catch up.

Together, these two signals—the Amodei alarm about automation of cognition and the strategic demand to prepare for disruption—create an existential imperative. Incremental adaptation will not suffice. A new way of thinking is required.

2. Beyond Knowledge: Why Thinking Must Change

Knowledge has long been the currency of expertise. Educated professionals distinguished themselves by command of facts, mastery of doctrine, or the ability to produce cogent analysis. But if AI can generate that same output in seconds, faster and often more accurately than humans, then knowledge alone is no longer decisive.

What remains uniquely human is judgment. Unlike knowledge, which can be codified and automated, judgment is situational, relational, and ethical. It requires perspective, self-awareness, and the capacity to weigh competing values under conditions of uncertainty.

This is why the future of thinking must be defined not by accumulation of knowledge but by cultivation of three interdependent capabilities:

- Metacognition – the ability to think about one’s own thinking, to recognize biases, and to adjust in real time.

- Moral Agency – the courage and clarity to take responsibility for decisions, especially when machines suggest otherwise.

- Strategic Foresight – the capacity to anticipate, imagine, and prepare for futures that may never resemble the present.

Together, these capabilities form a human edge that no machine can replicate. But cultivating them requires more than traditional education; it requires a philosophy of futures literacy.

3. Futures Literacy: Rehearsing the Future

3.1 Defining the Discipline

Futures literacy, as defined by UNESCO, is the capacity to understand how the future shapes what we see and do in the present. It is not about prediction. It is about imagination: learning to use multiple possible futures as lenses to reframe today’s choices.

The practice begins with a recognition that the future does not exist—it can only be imagined. By deliberately rehearsing multiple scenarios, individuals reveal the hidden assumptions that shape their thinking. This reframing transforms uncertainty from a threat into a source of creativity.

3.2 From Forecasting to Reframing

Traditional planning focuses on extrapolating trends to predict likely outcomes. Futures literacy deliberately expands beyond the probable to include the possible and even the unthinkable. Its value lies not in accuracy but in perspective.

The central question shifts from “What will happen?” to “What assumptions am I making, and what happens when they no longer hold?” In an era where AI may erase entire categories of work, such reframing is essential.

3.3 Three Futures to Rehearse

To illustrate, consider three divergent futures shaped by AI disruption:

- The Optimistic Agent: AI augments human work, freeing people to focus on creativity, ethics, and strategy. Judgment becomes the premium skill.

- The Bloodbath Bureaucracy: AI eliminates vast swaths of white-collar jobs. Institutions face budget cuts and legitimacy crises. Survival depends on proving irreducible human value.

- The Parallel Future: Civilian platforms absorb routine cognitive tasks; only high-level leadership, judgment, and integration remain distinctly human.

These scenarios are not predictions. They are rehearsals. By inhabiting each one, individuals and organizations test their resilience, question their assumptions, and identify which skills endure across all futures.

4. The Human Triad: Metacognition, Moral Agency, and Foresight

4.1 Metacognition: Thinking About Thinking

Metacognition is the foundation of adaptive judgment. It is the practice of noticing one’s own cognitive processes—biases, shortcuts, blind spots—and adjusting them. In uncertain environments, leaders without metacognition fall prey to automation bias, overconfidence, or rigid thinking. Those with it can pivot, reframe, and adapt.

Military and corporate experiments already highlight the power of metacognitive training: reflective exercises, systems thinking models, and structured questioning that reveal not just what we think, but how. In an age of machine outputs, the ability to interrogate one’s own reasoning becomes indispensable.

4.2 Moral Agency: The Ethical Core

AI promises speed and efficiency, but it also creates accountability gaps. When an autonomous system contributes to harm, who bears responsibility—the human operator, the machine’s designer, or the institution deploying it?

Compounding this is automation bias: the human tendency to trust machine outputs, even when flawed. Left unchecked, this can erode moral responsibility, reducing life-and-death decisions to algorithmic suggestions.

Moral agency resists this erosion. It is the capacity to take responsibility, to exercise meaningful human control, and to act with courage when machines suggest otherwise. In the human-machine era, moral agency is not ancillary; it is the essence of leadership.

4.3 Strategic Foresight: Anticipating the Unthinkable

Foresight is the systematic discipline of imagining and preparing for multiple futures. Horizon scanning, scenario development, and wargaming are tools, but the underlying mindset is anticipatory: expecting disruption, rehearsing discontinuity, and remaining flexible.

AI itself can become a tool for foresight—powering simulations that respond dynamically to human choices, creating adaptive environments for practicing strategy. But the point is not technological. It is human: foresight trains the imagination to see beyond the present, to prepare not for certainty but for surprise.

5. Rehearsing Discontinuity: Living with the Future

To cultivate this triad, futures literacy must be more than a concept. It must become a lived practice: regularly rehearsing discontinuity, inhabiting multiple futures, and learning to see the present differently.

Consider a futures literacy exercise: participants are told their profession will vanish in three years due to automation. What skills remain valuable? What assumptions collapse? How would they reinvent themselves? The value lies not in accuracy but in the jolt—the reframing that reveals hidden dependencies and opens space for creativity.

Living with futures means treating uncertainty not as paralyzing but as normal. It means developing comfort with paradox, resilience in ambiguity, and the ability to navigate without a map.

6. Toward a New Way of Thinking

The convergence of AI disruption and strategic urgency demands more than institutional reform. It demands a philosophy of thinking that privileges judgment over knowledge, imagination over prediction, and moral courage over efficiency.

Futures literacy offers such a philosophy. It teaches us to rehearse rather than predict, to reframe rather than extrapolate, to cultivate the uniquely human edge. Coupled with metacognition, moral agency, and foresight, it equips leaders and thinkers to thrive in an era where machines may know more but will never care, never choose, never bear responsibility.

The decisive advantage of the future will not belong to those who know the most, but to those who can think differently.

In the End: The Human Edge in the Age of Machines

The future cannot be predicted, but it can be rehearsed. Agentic AI will continue to expand, automating cognitive labor and challenging the foundations of knowledge-based expertise. Strategic rivalry will intensify, demanding agility and resilience in the face of disruptive change.

In this environment, the question is not whether disruption will come, but whether we will be ready to think differently when it does. Futures literacy offers a path: a discipline of imagination that reveals assumptions, rehearses alternatives, and reframes the present. When joined with metacognition, moral agency, and foresight, it becomes more than a skillset—it becomes a way of being.

To rehearse the future is to refuse paralysis. It is to recognize that the human edge lies not in what we know, but in how we judge, decide, and imagine. Machines may calculate. Only humans can care. The challenge, then, is not to resist the future but to build the habits of thought that allow us to meet it with clarity, courage, and creativity.

The future fight will be as much intellectual as it is material. To prepare for it is not to memorize more knowledge, but to cultivate the way of thinking that keeps humanity decisive in the age of intelligent machines.